Expert warns that OpenAI displays a ‘very dangerous mindset’ concerning safety.

An AI expert has accused OpenAI of rewriting its own history and being overly dismissive of safety concerns. Former OpenAI policy researcher Miles Brundage criticized a safety and alignment document the company published this week. The document describes OpenAI's path toward artificial general intelligence (AGI) as a series of small steps rather than a “single major leap,” and says this gradual deployment approach will help it identify safety issues and assess the potential for AI misuse at each stage.

Amid broader criticism of AI tools like ChatGPT, experts are particularly worried that chatbots may give misleading health and safety information (as in the notorious incident in which Google's AI search feature told users to eat rocks) and could be exploited for political manipulation, misinformation, and scams. OpenAI in particular has been criticized for a lack of transparency about how it develops its AI models, which may contain sensitive personal information.

The OpenAI document appears to be a response to these concerns. It describes the development of the earlier GPT-2 model as “discontinuous,” notes that the model was initially withheld over “concerns about malicious applications,” and says the company now intends to follow a principle of iterative development. Brundage, however, argues that the document distorts the story and misrepresents the history of AI development at OpenAI.

“OpenAI’s release of GPT-2, in which I participated, was entirely consistent with and anticipated OpenAI’s current philosophy of iterative deployment,” Brundage posted on X. “The model was released stepwise, with lessons shared at every stage. Many security experts at the time expressed gratitude for this prudence.”

Brundage further criticized the company's apparent attitude toward risk as outlined in the document, stating, “It seems like a burden of proof is being established in this section, where concerns are deemed alarmist and require overwhelming evidence of imminent threats to act upon them—otherwise, the message is to keep releasing products. This mentality is highly risky for advanced AI systems.”

This criticism arises as OpenAI faces growing scrutiny over claims that it prioritizes “flashy products” over safety.

Georgina has been a space writer at Digital Trends for six years, covering human space exploration, planets, and more.

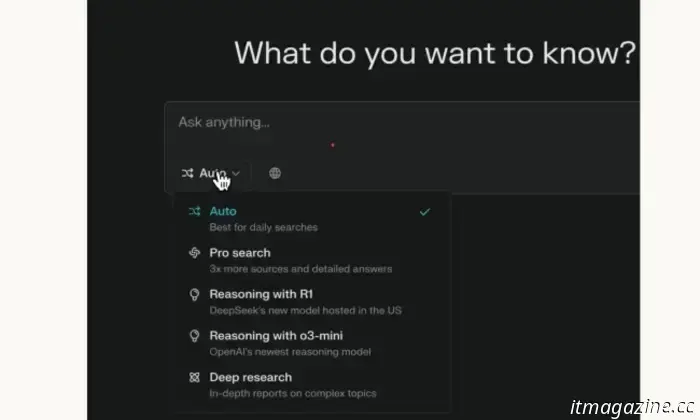

Perplexity surpasses Gemini and ChatGPT by offering a remarkable AI service for free.

What happens if you ask an AI chatbot to search the web, find a specific type of source, and then compile a comprehensive report from what it gathers? Gemini can do this for $20 a month, and ChatGPT for $200 a month. Perplexity, meanwhile, offers it for free several times a day. Perplexity calls its new tool Deep Research, similar to the offerings from OpenAI and Google Gemini.

Elon Musk says he will drop his multibillion-dollar bid for OpenAI if it remains a non-profit.

Elon Musk, a founding member of OpenAI, left the organization on bad terms before ChatGPT launched, unhappy with the non-profit's shift toward a profit-driven business model. Musk recently bid $97.4 billion to acquire OpenAI's non-profit arm, but has now indicated he will withdraw the offer if the AI giant abandons its for-profit plans.

“If the OpenAI board is willing to uphold the charity's mission and agree to remove the ‘for sale’ sign from its assets by halting its shift to profit, Musk will retract the bid,” the billionaire's attorney said in a court filing, as reported by Reuters.

OpenAI has canceled its standalone o3 model release and will launch GPT-5 instead.

OpenAI CEO Sam Altman announced via a post on X on Wednesday that the company’s o3 model has been sidelined in favor of a “simplified” GPT-5, which is expected to be released in the next few months.

Other articles

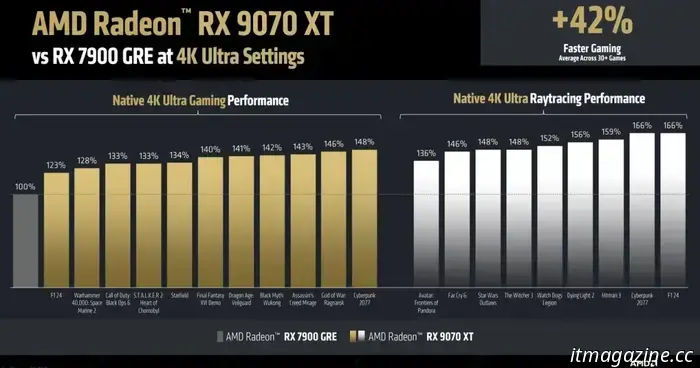

AMD did it! Now we need to keep up the pressure for price cuts.

Graphics cards need price cuts more than anything else right now, even with AMD's new affordable mid-range options. They should all cost less than they currently do.

3 must-watch Hulu movies to stream this weekend (March 7-9)

Our picks for the weekend include a story from Hollywood's distant past, a gripping dark drama, and a laughably silly comedy: three fantastic movies to stream on Hulu.

3 must-watch Hulu movies to stream this weekend (March 7-9)

Our selections for the weekend include a story from Hollywood's distant past, a gripping dark drama, and a laughably silly comedy—three fantastic movies to stream on Hulu.

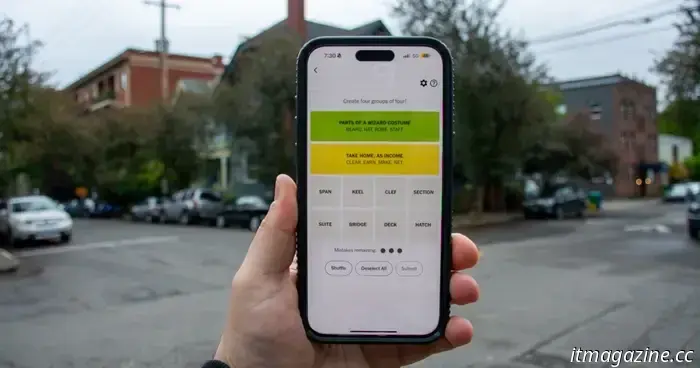

NYT Connections: clues and solutions for Friday, March 7.

Connections is the latest puzzle game from the New York Times, and it can be pretty challenging. If you need help solving today's puzzle, we're here to help.

Instagram may soon become even more hectic with the introduction of Community Chats.

Meta is reportedly looking to transform Instagram into a more vibrant platform by introducing a new community conversation feature akin to those found in Telegram and Discord.

Three overlooked Netflix films to check out this weekend (March 7–9)

Searching for lesser-known movies to enjoy this weekend? Netflix offers a diverse selection of hidden treasures, featuring indie dramas, distinctive anthologies, and exciting films.

The price of AMD's RX 9070 XT may increase significantly soon.

According to a retailer, AMD's RX 9000 series might be available at MSRP right now, but this situation is unlikely to continue for long.
