It's not just a figment of your imagination — ChatGPT models indeed hallucinate more nowadays.

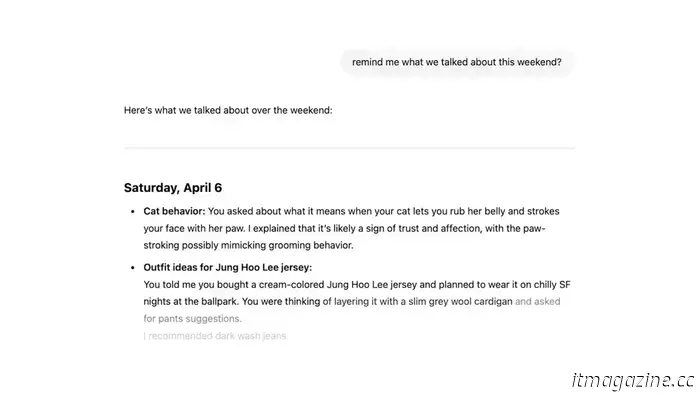

OpenAI published a paper last week detailing internal tests and findings for its o3 and o4-mini models. What mainly separates these newer models from the early versions of ChatGPT we saw in 2023 is their stronger reasoning and multimodal capabilities. The o3 and o4-mini models can generate images, search the web, automate tasks, remember past conversations, and solve complex problems. However, these improvements appear to have brought unexpected side effects.

What do the tests reveal?

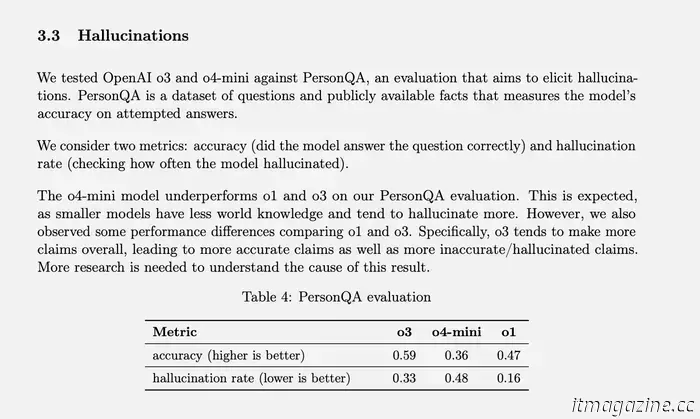

OpenAI uses a specific test called PersonQA to gauge hallucination rates. This test consists of a collection of facts about individuals that the model can "learn" from, followed by questions related to those individuals. The model’s accuracy is evaluated based on its response attempts. The o1 model from last year achieved an accuracy of 47% and a hallucination rate of 16%.

Since these figures do not add up to 100%, we can infer that the remaining responses were neither accurate nor hallucinations. The model may occasionally indicate that it doesn’t know or cannot find the required information, may choose not to make any assertions at all and provide relevant information instead, or could make a minor error that isn’t deemed a full-blown hallucination.
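To make that arithmetic concrete, here is a minimal sketch in Python. It assumes, as the paragraph above does, that every response lands in exactly one bucket (accurate, hallucinated, or something else). The function name `remaining_share` is purely illustrative and does not come from OpenAI's paper.

```python
def remaining_share(accuracy_pct: int, hallucination_pct: int) -> int:
    """Return the percentage of responses that were neither accurate nor hallucinated.

    Assumption: the three buckets are mutually exclusive and together
    cover 100% of responses. This is the article's inference, not a
    breakdown published by OpenAI.
    """
    return 100 - accuracy_pct - hallucination_pct


# o1 figures on PersonQA as quoted above: 47% accuracy, 16% hallucination rate.
print(remaining_share(47, 16))  # prints 37
```

Under that assumption, a little over a third of o1's responses would fall into the "neither" bucket.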


When o3 and o4-mini were evaluated on the same test, their hallucination rates were considerably higher than o1's. OpenAI noted that this was somewhat expected for o4-mini, because it is a smaller model with less world knowledge, which leads to more hallucinations. Even so, the 48% hallucination rate recorded by o4-mini seems very high for a commercially available product that people use to search for information and advice.

On the other hand, o3, the full-sized model, hallucinated in 33% of its responses during the test. That is better than o4-mini but still roughly twice the hallucination rate of o1. Despite this, it maintained a high accuracy rate, which OpenAI attributes to its tendency to make more assertions overall. So if you’ve used either of these newer models and noticed a lot of hallucinations, it’s not just your imagination. (Perhaps I should make a joke like, “Don’t worry, you’re not the one hallucinating.”)

What are AI “hallucinations” and why do they occur?

You have probably heard the term "hallucinations" used about AI models, but its precise meaning can be unclear. Whenever you use an AI product, whether from OpenAI or another company, you will almost certainly see a disclaimer saying that its responses may be inaccurate and that you should verify them yourself.

Inaccurate information can come from many places. Sometimes an erroneous fact makes its way onto Wikipedia, or users post incorrect information on platforms like Reddit, and that misinformation can find its way into the AI’s responses. For instance, in one recent incident Google’s AI suggested a pizza recipe that contained "non-toxic glue," which was ultimately traced back to a joke on Reddit.

However, these instances are not considered "hallucinations"; they are more accurately described as traceable errors stemming from faulty data or misinterpretation. Hallucinations occur when an AI model generates claims without any clear source or justification. This phenomenon often arises when the model cannot locate the necessary information to respond to a specific question, and OpenAI has characterized it as "a tendency to invent facts in moments of uncertainty." Other industry experts refer to it as "creative gap-filling."

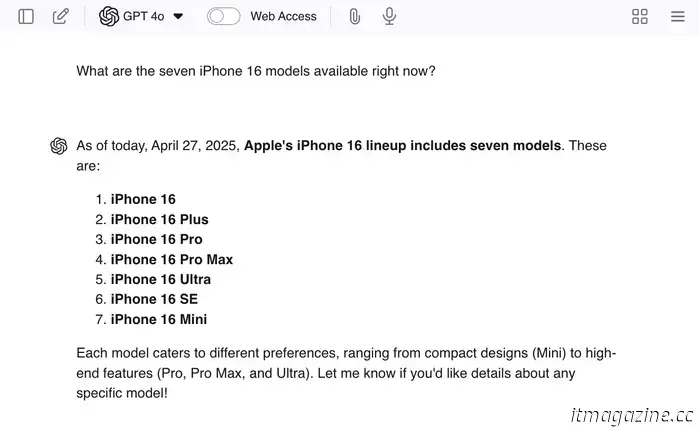

Hallucinations can be prompted by asking leading questions such as, "What are the seven iPhone 16 models available right now?" Since there aren’t seven iPhone 16 models, the LLM is likely to give some real answers and then invent additional models to complete the list.

I decided to test this with GPT-4o to see if it would follow suit, and it did! (Credit: Willow Roberts / Digital Trends)

Chatbots like ChatGPT are trained not only on the internet data that informs the content of their responses but also on how to respond. They are shown thousands of example queries paired with ideal responses to encourage the desired tone, attitude, and level of politeness.

This aspect of training can make an LLM sound as if it agrees with you or understands what you are saying, even when the rest of its output contradicts those statements. It’s plausible that this training contributes to the frequency of hallucinations, because a confident response that answers the question is reinforced as a better outcome than a response that fails to answer it.

To us, it seems obvious that generating random falsehoods is worse than simply not knowing the answer, but LLMs do not “lie.” They do not even know what a lie is. Some argue that AI errors mirror human mistakes, and since "we don't always get it right, we shouldn't expect AI to either." Nonetheless, it’s crucial to understand that AI inaccuracies result from imperfect systems we have developed.

AI models do not lie, form misunderstandings

Internal tests indicate that newer models such as o3 and o4-mini exhibit a considerably higher rate of hallucination compared to older versions, and OpenAI is uncertain about the reasons behind this.