Deepfake detection improves with algorithms that account for demographic diversity.

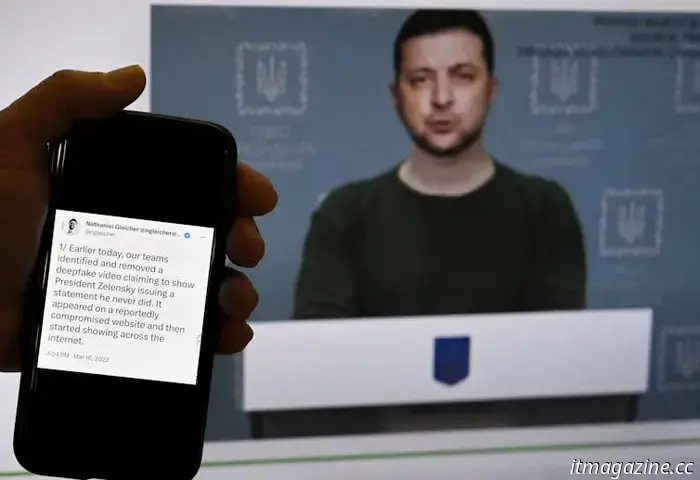

Deepfakes, which involve convincingly placing words in another person's mouth, are becoming more advanced and increasingly difficult to detect. Recent instances of deepfakes include explicit images of Taylor Swift, an audio clip of President Joe Biden advising New Hampshire voters against voting, and a fabricated video of Ukrainian President Volodymyr Zelenskyy urging his troops to surrender.

While companies have developed tools to detect deepfakes, studies have found that biases in the data used to train these tools can lead to certain demographic groups being unfairly targeted.

A 2022 deepfake of Ukrainian President Volodymyr Zelenskyy falsely portrayed him asking his troops to stop fighting.
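To make "unfairly targeted" concrete: one common way to check a detector for this kind of bias is to compare, group by group, how often it wrongly flags *real* faces as fake (the false positive rate). The sketch below is a minimal illustration, not the authors' evaluation code. The function names, the 0/1 label convention and the "A"/"B" group tags are hypothetical.

```python
from collections import defaultdict

def per_group_false_positive_rate(labels, preds, groups):
    """Fraction of real samples (label 0) that the detector flags as fake (pred 1), per group."""
    real_count = defaultdict(int)  # real samples seen in each group
    flagged = defaultdict(int)     # real samples wrongly flagged as fake in each group
    for y, p, g in zip(labels, preds, groups):
        if y == 0:  # only real samples can be false positives
            real_count[g] += 1
            if p == 1:
                flagged[g] += 1
    return {g: flagged[g] / real_count[g] for g in real_count}

def fpr_gap(rates):
    """Largest difference in false positive rate between any two groups (0 means parity)."""
    return max(rates.values()) - min(rates.values())

# Toy data (hypothetical): 1 = fake, 0 = real; "A" and "B" are placeholder group tags.
labels = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
preds  = [0, 0, 0, 1, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B", "A", "B"]

rates = per_group_false_positive_rate(labels, preds, groups)
print(rates)           # {'A': 0.25, 'B': 0.5}
print(fpr_gap(rates))  # 0.25
```

In this toy example both groups face the same detector, yet real faces in group B are flagged as fake twice as often as those in group A. A single overall accuracy figure would not reveal that disparity.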

My team and I have developed new techniques that improve both the fairness and the accuracy of deepfake detection algorithms. We trained our deep-learning methods on a large dataset of facial forgeries. We built our work around the state-of-the-art Xception detection algorithm, a widely used foundation for deepfake detection systems that detects deepfakes with 91.5% accuracy.

We created two separate deepfake detection methods intended to promote fairness. The first makes the algorithm more aware of demographic diversity: it labels the training data by gender and race so the model can reduce errors among underrepresented groups. The second aims to improve fairness without relying on demographic labels, focusing instead on features that are not visible to the human eye.
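The first strategy relies on demographic labels during training. The article does not spell out the exact loss the authors used, so as a stand-in, here is one simple and common way to use such labels: reweight the training loss so each group contributes equally, preventing errors on an underrepresented group from being drowned out by the majority. This is a minimal sketch of that generic technique (inverse-frequency group weighting of binary cross-entropy), not the authors' method. The function names and label conventions are hypothetical.

```python
import math
from collections import Counter

def group_weights(groups):
    """Inverse-frequency weight per group, scaled so the mean per-sample weight is 1."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return {g: n / (k * c) for g, c in counts.items()}

def group_weighted_bce(probs, labels, groups):
    """Mean binary cross-entropy with each sample scaled by its group's weight.

    probs:  predicted probability that each sample is fake
    labels: ground truth, 1 = fake, 0 = real
    groups: demographic tag per sample (this approach requires these labels)
    """
    w = group_weights(groups)
    eps = 1e-12  # clip probabilities to avoid log(0)
    total = 0.0
    for p, y, g in zip(probs, labels, groups):
        p = min(max(p, eps), 1 - eps)
        loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
        total += w[g] * loss
    return total / len(probs)

# Hypothetical batch: group "B" is underrepresented (1 of 4 samples).
groups = ["A", "A", "A", "B"]
print(group_weights(groups))  # "A" gets about 0.667, "B" gets 2.0

probs = [0.9, 0.2, 0.1, 0.4]
labels = [1, 0, 0, 1]
print(group_weighted_bce(probs, labels, groups))
```

Because every training sample needs a group tag, this kind of reweighting only fits the first, label-based strategy. The second strategy would need a mechanism that does not depend on `groups` at all.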

The first method worked best. It raised accuracy from the 91.5% baseline to 94.17%, a larger gain than our second method and several other approaches we tested. It also improved accuracy while enhancing fairness, which was our main goal.
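One way to read the jump from 91.5% to 94.17%: the absolute gain is 2.67 percentage points, but measured against the errors the baseline still made, it removes roughly a third of them. The arithmetic below uses only those two accuracy figures and assumes both were measured on the same test set:

$$
\begin{aligned}
\text{error}_{\text{baseline}} &= 1 - 0.915 = 0.085 \\
\text{error}_{\text{new}} &= 1 - 0.9417 = 0.0583 \\
\text{relative error reduction} &= \frac{0.085 - 0.0583}{0.085} = \frac{0.0267}{0.085} \approx 0.314
\end{aligned}
$$

That is, about 31% fewer misclassifications than the Xception baseline.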

We believe that ensuring fairness and accuracy is vital for public acceptance of artificial intelligence technology. When large language models like ChatGPT "hallucinate," they can disseminate false information, undermining public trust and safety. Similarly, deepfake images and videos can hinder the acceptance of AI if they are not swiftly and accurately identified. Enhancing the fairness of these detection algorithms to prevent disproportionate harm to particular demographic groups is essential.

Our research focuses on the fairness of deepfake detection algorithms, rather than merely balancing the data. It puts forward a novel approach to algorithm design that incorporates demographic fairness as a fundamental component.

Siwei Lyu, Professor of Computer Science and Engineering; Director, UB Media Forensic Lab, University at Buffalo and Yan Ju, Ph.D. Candidate in Computer Science and Engineering, University at Buffalo

This article is republished from The Conversation under a Creative Commons license. Read the original article.
