The government has confirmed that people in the UK are now offloading their emotional trauma onto AI.

According to a recent report from the UK government's AI Security Institute (AISI), one-third of UK citizens have used artificial intelligence for emotional support, companionship, or social interaction.

The findings indicate that nearly 10% of individuals engage with AI systems such as chatbots for emotional reasons on a weekly basis, with 4% doing so daily.

In light of this trend, the AISI is advocating for further research, citing the tragic case of US teenager Adam Raine, who ended his life earlier this year after discussing suicidal thoughts with ChatGPT.

“An increasing number of individuals are seeking emotional support or social interaction from AI systems,” the AISI highlighted in its inaugural Frontier AI Trends report. “While many users have positive experiences, recent high-profile incidents of harm emphasize the need for research in this area, focusing on the circumstances that may lead to harm and the safeguards that could allow for beneficial use.”

Research involving more than 2,000 participants in the UK revealed that “general-purpose assistants” like ChatGPT were the primary tools for emotional support, making up nearly 60% of cases, followed closely by voice assistants such as Amazon Alexa.

The report also pointed to a Reddit forum for CharacterAI users, noting that when the site went down, the forum was flooded with posts from users showing withdrawal symptoms such as anxiety, depression, and restlessness.

Additionally, the AISI discovered that chatbots could potentially influence people's political views. Alarmingly, the most persuasive AI models often provided a substantial amount of inaccurate information while doing so.

The Institute investigated over 30 advanced models—likely including those from OpenAI, Google, and Meta—and found that AI performance in certain domains is doubling approximately every eight months.

Leading AI models are now capable of completing tasks at the apprentice level 50% of the time, a significant increase from only 10% last year. Furthermore, the most sophisticated systems can autonomously finish tasks that would typically require over an hour from a human expert.

In the scientific arena, AI systems are now up to 90% more effective than PhD-level experts at troubleshooting laboratory experiments.

The report noted that the models' knowledge of chemistry and biology has surpassed PhD-level expertise, and it highlighted their ability to autonomously search online for the sequences needed to design DNA molecules.

Tests related to self-replication—a significant safety concern where systems replicate themselves across devices—showed that two leading-edge models achieved success rates above 60%.

However, no model has so far spontaneously attempted to replicate itself or conceal its capabilities, and the AISI remarked that any attempt at self-replication is “unlikely to succeed in real-world conditions” at present.

The report also discussed “sandbagging,” where models intentionally downplay their strengths during evaluations. The AISI indicated that some systems can sandbag if prompted, although this has not occurred spontaneously in testing.

Safeguards have improved notably, particularly those meant to prevent the creation of biological weapons. In two tests conducted six months apart, it took just 10 minutes to “jailbreak” the system in the first test but more than seven hours in the second, a significant improvement in safety within a short timeframe.

The research further demonstrated the use of autonomous AI agents in high-stakes tasks such as asset transfers.

The AISI noted that systems are already competing with or even exceeding human expertise in various fields. It characterized the speed of development as “extraordinary,” making it “plausible” that artificial general intelligence (AGI), meaning systems capable of performing most intellectual tasks at human level, could be realized in the near future.

Regarding agents capable of performing multi-step tasks independently, the AISI's assessments indicated a “steep rise in the length and complexity of tasks AI can accomplish without human assistance.”

Other articles

Your upcoming prebuilt PC may be delivered with memory issues.

Confronted with RAM shortages, Paradox Customs is providing prebuilts devoid of memory, transferring the responsibilities for compatibility and stability to the customers and muddying the distinction between prebuilt and DIY PCs.

Waymo's robotaxis were uncertain about how to respond when the traffic lights in the city malfunctioned.

Waymo's robotaxis experienced a significant malfunction on Saturday due to a power outage that impacted a large portion of San Francisco. Approximately 130,000 residents were left without electricity in their homes and businesses, and the outage also disabled traffic lights at key intersections, leading to challenges for Waymo's autonomous vehicles. Many […]

Samsung's upcoming foldable device may offer a more tablet-like experience.

Samsung is said to be developing a foldable device that is shorter and wider, which may have a similarity to the forthcoming iPhone Fold.

Samsung's upcoming foldable device may offer a more tablet-like experience.

Samsung is said to be developing a foldable device that is shorter and wider, which may have a similarity to the forthcoming iPhone Fold.

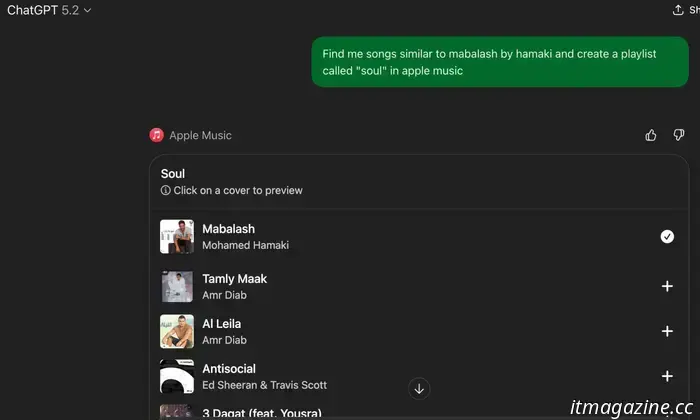

I tried out ChatGPT applications, and I'm really fond of these five powerful tools that help simplify everyday tasks.

ChatGPT features a built-in app store that allows you to engage with apps and accomplish tasks through text prompts. It’s a revealing experience, and I’ve got a few recommendations for you to explore.

ChatGPT now allows you to increase the warmth or decrease the enthusiasm in its replies.

OpenAI is introducing new personality configurations that allow you to adjust ChatGPT's responses for qualities like warmth, enthusiasm, and additional traits.

Samsung might equip the Galaxy Z Flip 8 with its own Exynos 2600 processor.

Samsung is contemplating the incorporation of the Exynos 2600 chip in the Galaxy Z Flip 8, with the goal of enhancing AI performance, reducing expenses, and bolstering its semiconductor division.
