How WeTransfer sparked concerns regarding the training of AI on user data.

Dutch file-sharing platform WeTransfer is facing backlash after users noticed significant updates to its terms of service, which seemed to allow the company to use uploaded files to train AI models. Although the company has since removed the contentious language, users are still upset. Here’s an overview of the situation and its implications.

What changes did WeTransfer make?

Users of WeTransfer found out this week that the service had modified its terms to include a clause permitting it a perpetual, royalty-free license to utilize user-uploaded content, including for “improving machine learning models that enhance content moderation.” These changes were set to take effect on August 8.

The wording was ambiguous enough that many users—including children’s author Sarah McIntyre and comedian Matt Lieb—felt it allowed WeTransfer to use or even sell their files to train AI without obtaining permission or providing compensation.

How is this acceptable, @WeTransfer? You're not a free service; I *pay* you to transfer my large artwork files.

I DO NOT pay you to claim rights to use them to train AI or print, sell, and distribute my artwork, effectively competing with me using my own creations. 😡 pic.twitter.com/OHPIjRGGOM

— Sarah McIntyre (@jabberworks) July 15, 2025

How did WeTransfer address the situation?

On Tuesday afternoon, WeTransfer quickly attempted to quell the controversy, stating in a press release that it does not use user content for AI training, nor does it sell or share files with third parties. The company mentioned it considered the potential of using AI to “improve content moderation” in the future but clarified that such functionality “hasn’t been built or deployed in practice.”

WeTransfer has also revised its terms of service to eliminate references to machine learning. The updated version specifies that users grant the company “a royalty-free license” to use their content for “operating, developing, and improving the service.”

However, the trust of users may already be compromised.

Why are users concerned?

WeTransfer joins a growing list of companies receiving criticism for training AI systems with user data. Adobe, Zoom, Slack, and Dropbox, among others, have also recently either retracted or clarified similar AI-related policies following public backlash. These cases tap into broader frustrations regarding copyright and consent in the era of AI, highlighting a significant trust gap between users and technology companies.

WeTransfer has long positioned itself as a creative, privacy-minded file-sharing service. Therefore, the vague language regarding AI and extensive licensing rights felt like a betrayal to its users, particularly artists and freelancers concerned that their work could be silently integrated into machine learning models without their approval.

Despite WeTransfer’s clarification of its terms, many users believe the damage has already occurred. In responses to the company’s official announcement on X, some indicated that it seemed as if the service had tested the waters for broader AI permissions, faced immediate public outcry, and then hurriedly retracted its stance.

WeTransfer is unlikely to be the last tech company embroiled in such a controversy. As excitement over AI grows, user data is becoming increasingly valuable.

Other articles

This cargo vessel is converting its CO2 emissions into eco-friendly cement.

UK startup Seabound claims to have developed the world’s first commercial carbon capture system designed for cargo ships. This system is currently operational at sea.

This cargo vessel is converting its CO2 emissions into eco-friendly cement.

UK startup Seabound claims to have developed the world’s first commercial carbon capture system designed for cargo ships. This system is currently operational at sea.

Reasons for requesting a pause on the AI Act from the perspective of a climate advocate.

Antoine Rostand, the president and co-founder of the environmental intelligence company Kayrros, is concerned that the AI Act may negatively impact sustainability initiatives.

Reasons for requesting a pause on the AI Act from the perspective of a climate advocate.

Antoine Rostand, the president and co-founder of the environmental intelligence company Kayrros, is concerned that the AI Act may negatively impact sustainability initiatives.

Norwegian investment company ventures into the AI boom in the frigid north.

Aker is the most recent company venturing to the far north to take advantage of plentiful green energy and natural cooling for energy-intensive data centres.

Norwegian investment company ventures into the AI boom in the frigid north.

Aker is the most recent company venturing to the far north to take advantage of plentiful green energy and natural cooling for energy-intensive data centres.

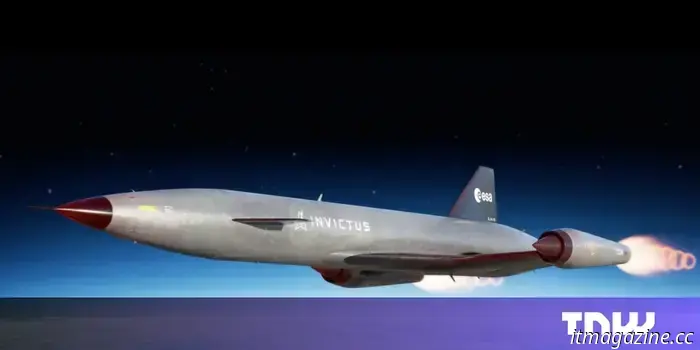

ESA's spaceplane initiative breathes new life into a defunct company's hypersonic engine.

The ESA is supporting a new initiative named Invictus, which seeks to create and launch a hydrogen-fueled spaceplane by 2031.

ESA's spaceplane initiative breathes new life into a defunct company's hypersonic engine.

The ESA is supporting a new initiative named Invictus, which seeks to create and launch a hydrogen-fueled spaceplane by 2031.

How WeTransfer sparked concerns regarding the training of AI on user data.

WeTransfer users were worried that the company planned to use their data to train AI models. This situation highlighted increasing concerns regarding trust in AI.