AI is capable of many tasks, but it still cannot create games or play them effectively.

As AI tools progress, we're increasingly encouraged to delegate more intricate tasks to them. Large language models (LLMs) can manage our emails, create presentations, design applications, generate videos, conduct internet searches, and summarize findings, among other capabilities. However, they continue to face significant challenges with video games.

This year, two prominent AI companies, Microsoft and Anthropic, have tried to get their models to generate or play games, but the results are probably far more limited than many people imagine.

These ventures exemplify the current capabilities of generative AI—it has advanced considerably, yet it still cannot do everything.

Microsoft Creates Quake II

Generating video games presents challenges similar to those encountered in video generation—motion often appears strange and unnatural, and the AI may lose its grip on “reality” after a certain duration. Microsoft’s latest effort, accessible to anyone, is an AI-generated version of Quake II.

I tried it several times, and it offers a surreal experience, with blurry enemies appearing unexpectedly and the environment shifting as you move. Multiple times, upon entering a new room, the entrance vanished when I turned around—then, when I looked ahead again, the walls had altered.

This experience only lasts a few minutes before it ends and prompts you to start a new game—but if luck isn’t on your side, it may stop responding to your inputs even sooner.

Nevertheless, it's a fascinating experiment that I believe more people should witness. It allows you to see firsthand what generative AI excels at and where its current shortcomings lie. While it's impressive that an interactive game can be generated at all, it’s hard to envision anyone playing this tech demo and believing that AI will create the next Assassin’s Creed.

Yet, such assumptions do exist, largely due to the constant buzz surrounding AI. Even if someone is indifferent to artificial intelligence, it’s ubiquitous in discussions. The issue is that most of the information available to the public consists of exaggerated claims made by tech giants and their CEOs, which are picked up by the media.

As a result, people hear grandiose and often contradictory statements like these:

- "It has the potential to solve some of the world’s biggest problems, like climate change, poverty, and disease." (Bill Gates)

- "By 2025, companies like Meta will probably have AI that functions like a mid-level engineer capable of writing code." (Mark Zuckerberg)

- "Using AI effectively is now a fundamental expectation at Shopify; it's a versatile tool today and will only become more crucial." (Tobi Lutke, CEO of Shopify)

- "We are now confident we know how to build AGI as traditionally understood. By 2025, we may see AI agents 'join the workforce' and significantly alter business output." (Sam Altman, CEO of OpenAI)

- "AI is potentially more dangerous than issues like faulty aircraft design or poor car manufacturing, as it could lead to civilization's destruction." (Elon Musk)

These statements are quite extreme, right? AI is both a savior and a threat; it is a versatile tool for professionals and also their replacement; and we might even reach sci-fi-level AGI this year. When such messages dominate, people begin to expect remarkable things from these technologies and envision office workers talking to their computers as if they were characters from Star Trek.

However, that depiction doesn't align with reality. The actual scenario resembles a surreal, blurry Quake II with indistinct shapes for enemies. While ChatGPT-level LLMs were indeed a significant breakthrough in 2022 and provided much entertainment, for many applications that big tech is promoting today, AI simply doesn't possess sufficient capability. Its accuracy is too low, its ability to follow instructions is limited, context windows are too narrow, and it's trained more on internet noise than real-world knowledge.

Creating a video game is a complex task; after all, it takes teams of humans years to complete such projects. What about playing video games instead?

Claude “Plays” Pokémon Red

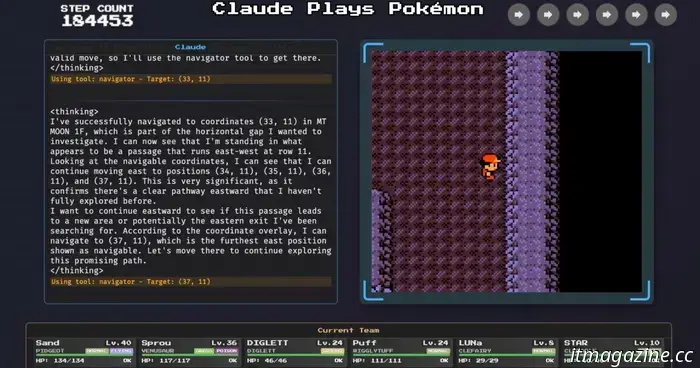

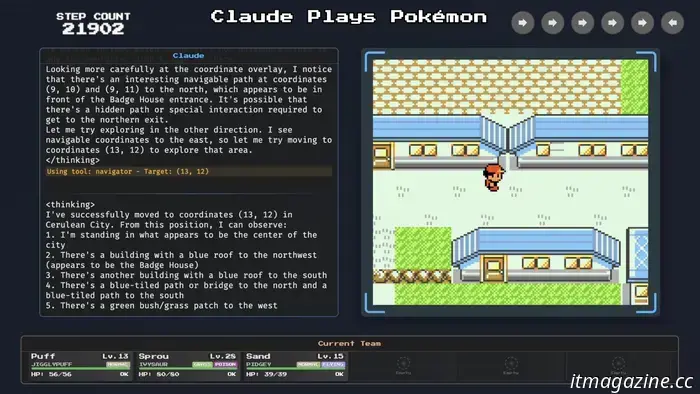

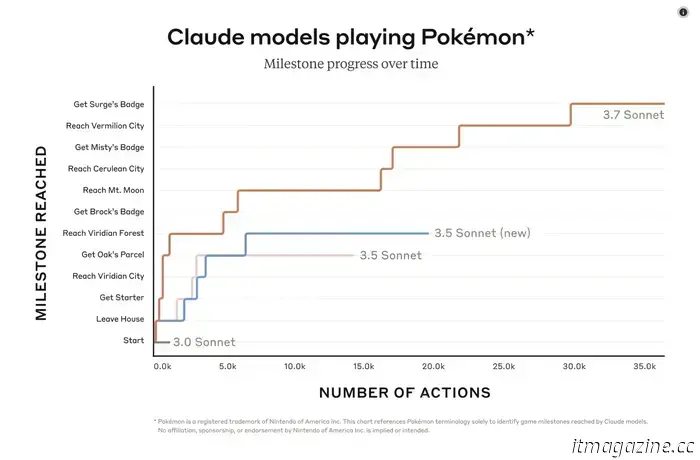

Interestingly, experimentation in this area is also ongoing. Anthropic’s latest model, Claude 3.7 Sonnet, has been playing Pokémon Red on Twitch for about two months, achieving the best performance by an LLM in playing Pokémon to date. However, he’s still far behind the average 10-year-old human player.

One major issue is speed—Claude takes thousands of actions over several days just to navigate through Viridian Forest.

Why is it so time-consuming? It's not due to an inability to strategize and win Pokémon battles—that's actually where he excels. The challenge lies in moving through the environment and avoiding obstacles like trees and buildings, which he struggles with. Claude hasn't been trained to play Pokémon, making it difficult for him to interpret the pixel art and its meanings.

Navigating maze-like areas such as Mt. Moon is especially tough for him; he has trouble mapping the area and avoiding retracing his steps. At one point,
