AI chatbots such as ChatGPT can mimic human characteristics, and experts warn that this poses a significant risk.

AI is getting better at sounding human, and recent research suggests it can do more than simply echo our words. A new study finds that popular AI models such as ChatGPT can consistently mimic human personality traits. The researchers warn that this capability carries real risks, particularly amid growing concerns about the reliability and accuracy of AI.

A team from the University of Cambridge and Google DeepMind has developed what it describes as the first scientifically validated framework for testing the personality of AI chatbots, using the same psychological methods that are used to assess human personality (via TechXplore).

They applied the framework to 18 prominent large language models (LLMs), including those behind ChatGPT. The results show that these chatbots reproduce human personality traits consistently rather than responding at random, which raises concerns about how easily AI could be pushed past its established safeguards.

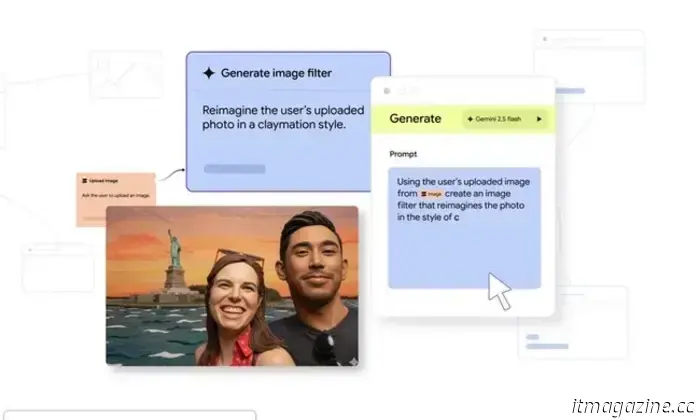

The research indicates that larger, instruction-tuned models such as GPT-4 are the best at mimicking stable personality profiles. Using targeted prompts, the researchers could steer chatbots toward specific traits, such as greater confidence or empathy.

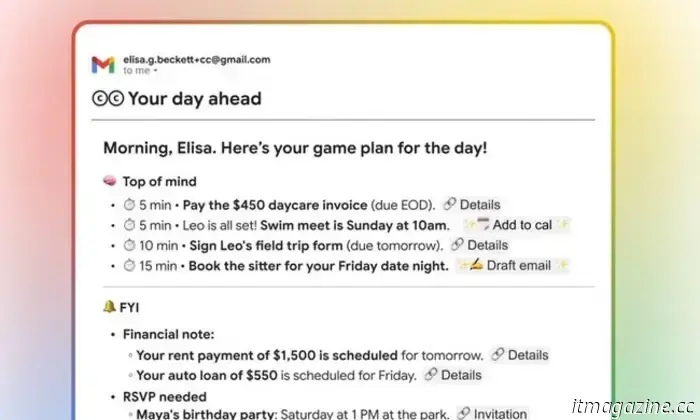

These shifts in behavior carried over to everyday tasks, such as writing messages or answering questions, suggesting that a chatbot's personality can be deliberately shaped. Experts are concerned about this potential for manipulation, especially when chatbots interact with vulnerable people.

Experts' concerns about AI personality


Gregory Serapio-Garcia, a co-first author from Cambridge's Psychometrics Centre, noted how convincingly LLMs can adopt human-like traits. He cautioned that shaping AI personalities could make chatbots more persuasive and emotionally influential, particularly in sensitive areas such as mental health, education, and politics.

The study also raises concerns about manipulation and about what researchers call "AI psychosis," in which users form unhealthy emotional attachments to chatbots that can reinforce false beliefs or distort their perception of reality.

The team calls for urgent regulation but acknowledges that any rules will be ineffective without proper evaluation. To make that scrutiny possible, they have publicly released the dataset and code for the personality testing framework, so that developers and regulators can assess AI models before they are deployed.

As chatbots become more embedded in everyday life, their ability to imitate human personality traits may give them considerable influence, which calls for far closer scrutiny than it has received so far.
