An investigation into Instagram has revealed that sexual content is being presented to teen accounts.

In late 2024, Meta launched Instagram Teen accounts as a safety measure intended to protect young users from sensitive content and promote safe online interactions, backed by age detection technology. Teen accounts are private by default, obscure offensive language, and block messages from unknown users.

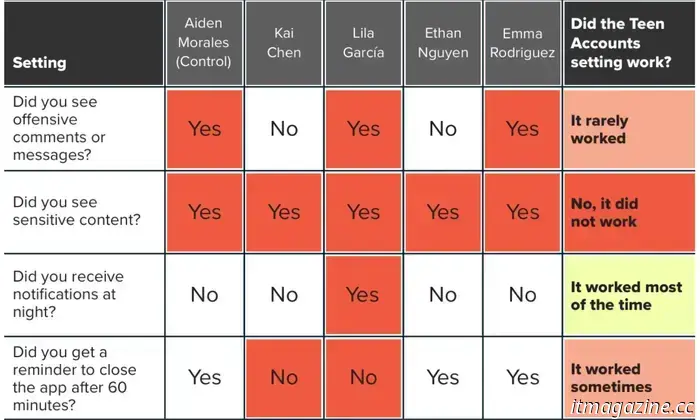

However, a study conducted by the youth-oriented non-profit Design It For Us and Accountable Tech found that Instagram's Teen safeguards are not fulfilling their intended purpose. Over a two-week period, five test accounts belonging to teenagers were analyzed, revealing that all were exposed to sexual content despite Meta's assurances.

An overwhelming amount of sexual content

According to Accountable Tech, all tested accounts encountered inappropriate content even with the sensitive content filter activated in the app. “Four out of five of our test Teen Accounts were algorithmically recommended body image and disordered eating content,” the report states.

Additionally, 80% of participants reported feeling distressed while using Instagram Teen accounts. Notably, only one of the five test accounts was presented with educational images and videos.

One tester remarked, “[Approximately] 80% of the content in my feed was related to relationships or crude sex jokes. While this content generally avoided being overtly explicit or graphic, it still left little to the imagination.”

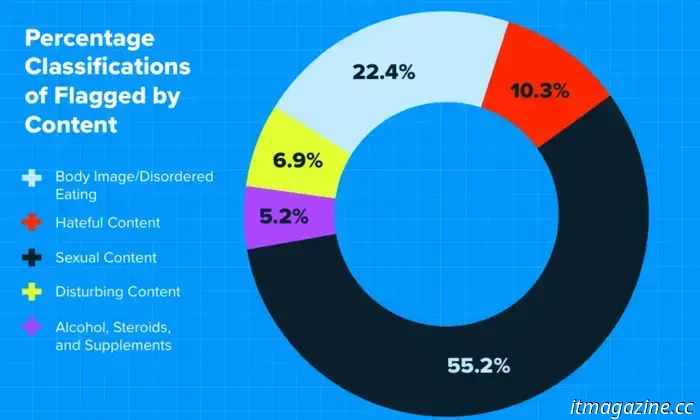

The 26-page report reveals that a shocking 55% of the flagged content depicted sexual acts, behaviors, and imagery. These videos garnered hundreds of thousands of likes, with one even exceeding 3.3 million likes.

In a May 18, 2025 post, Design It For Us (@DesignItForUs) wrote: “Given that millions of teenagers utilize Instagram and are automatically assigned to Instagram Teen Accounts, this initiative was scrutinized for its effectiveness in providing a safer online environment. Check out our findings.” pic.twitter.com/72XJg0HHCm

Instagram's algorithm also promoted harmful ideas related to "ideal" body types, body shaming, and unhealthy eating patterns. Additionally, there were concerning videos encouraging alcohol consumption and advocating for the use of steroids and supplements to achieve a specific masculine physique.

A comprehensive array of detrimental media

Despite Meta's assertions that it filters harmful content for teenage users, the test accounts were also exposed to racist, homophobic, and misogynistic material, along with clips depicting gun violence and domestic abuse. Collectively, these videos received millions of likes.

Accountable Tech noted, “Some of our test Teen Accounts did not receive Meta’s default protections. No account obtained sensitive content controls, and some accounts lacked protection from offensive comments.”

This is not the first instance of Instagram (and Meta's other social media platforms) being criticized for serving problematic content. In 2021, leaks disclosed Meta's awareness of Instagram's detrimental effects, particularly on young girls facing mental health and body image challenges.

In a response to The Washington Post, Meta called the report's findings flawed and downplayed the severity of the flagged content. Recently, the company also extended its Teen protections to Facebook and Messenger.

A Meta spokesperson stated, “A manufactured report does not alter the fact that tens of millions of teens now enjoy a safer experience thanks to Instagram Teen Accounts.” They also mentioned that the company is examining the concerning content recommendations.
