An expert discusses the smartphones that AI enthusiasts should consider to maximize the capabilities of Gemini and ChatGPT.

One of the most noticeable (and, frankly, least exciting) trends in the smartphone sector over recent years has been the constant talk about AI capabilities. In particular, tech companies have repeatedly highlighted how their newest mobile processors would enable on-device AI tasks such as video generation.

We are approaching that point, even if we are not entirely there yet. For all the buzz around hit-and-miss AI features for smartphone users, the conversation has rarely gone beyond flashy presentations of new processors and ever-improving chatbots.

It was only when Gemini Nano was conspicuously absent from the Google Pixel 8 that the public recognized how critical RAM capacity is for AI on mobile devices. Shortly thereafter, Apple indicated that Apple Intelligence would be available only on devices with at least 8GB of RAM.

Yet the concept of an "AI phone" encompasses more than memory capacity. How efficiently your phone executes AI-driven tasks also depends on unseen RAM optimizations and on the storage module, and not just on how much storage it offers.

Innovations in memory for AI phones

Micron / Digital Trends

Digital Trends spoke with Micron, a leading provider of memory and storage solutions, to discuss the importance of RAM and storage for AI applications in smartphones. The advancements presented by Micron should be on your radar when looking for a premium smartphone.

The company's latest offerings include the G9 NAND mobile UFS 4.1 storage and the 1γ (1-gamma) LPDDR5X RAM modules designed for flagship smartphones. So, how do these memory solutions enhance AI capabilities in smartphones, beyond simply increasing capacity?

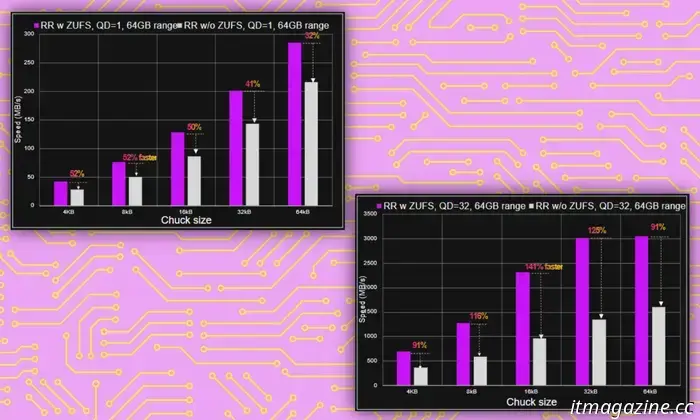

Let’s begin with the G9 NAND UFS 4.1 storage. The key benefits include reduced power consumption, lower latency, and increased bandwidth. The UFS 4.1 standard achieves peak sequential read and write speeds of 4100 MBps, representing a 15% improvement over the UFS 4.0 generation while also decreasing latency.
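
To put those figures in perspective, here is a rough back-of-the-envelope sketch. It makes several assumptions that are not from Micron: that the 15% gain applies to peak sequential throughput (so the implied UFS 4.0 peak is 4100 / 1.15), that 1GB equals 1000MB, and that the drive sustains its peak rate. The 7GB payload is borrowed from the Apple Intelligence footprint cited later in this article, purely for illustration.

```python
# Back-of-the-envelope read times at peak sequential speed.
# Assumptions (not from Micron): 1 GB = 1000 MB, the 15% gain applies to
# peak sequential throughput, and the drive sustains its peak rate.

UFS_4_1_MBPS = 4100   # peak sequential speed quoted in the article
IMPROVEMENT = 0.15    # quoted gain over the UFS 4.0 generation


def implied_baseline_mbps(new_mbps: float, improvement: float) -> float:
    """Speed of the previous generation implied by a relative improvement."""
    return new_mbps / (1.0 + improvement)


def read_time_seconds(size_gb: float, speed_mbps: float) -> float:
    """Ideal time to read `size_gb` gigabytes at `speed_mbps` MB/s."""
    return size_gb * 1000.0 / speed_mbps


baseline = implied_baseline_mbps(UFS_4_1_MBPS, IMPROVEMENT)
payload_gb = 7.0  # illustrative payload size, taken from this article

print(f"Implied UFS 4.0 peak: {baseline:.0f} MB/s")
print(f"7GB at UFS 4.1 peak:  {read_time_seconds(payload_gb, UFS_4_1_MBPS):.2f} s")
print(f"7GB at UFS 4.0 peak:  {read_time_seconds(payload_gb, baseline):.2f} s")
```

Real transfers rarely sustain peak speed, so these numbers are best read as lower bounds on load time rather than as measurements.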

Additionally, Micron’s next-generation storage modules can support capacities up to 2TB. They have also been made more compact, making them ideal for foldable phones and the next wave of sleek devices like the Samsung Galaxy S25 Edge.

Regarding RAM advancements, Micron has introduced what it calls 1γ LPDDR5X RAM modules. These achieve peak speeds of 9200 MT/s, allow for 30% more transistors due to their smaller size, and consume 20% less power. Micron has previously provided the slightly slower 1β (1-beta) RAM found in the Samsung Galaxy S25 series smartphones.

The interaction between storage and AI

Ben Rivera, Director of Product Marketing at Micron’s Mobile Business Unit, explains that Micron has made four significant enhancements to its latest storage solutions to speed up AI operations on mobile devices: Zoned UFS, Data Defragmentation, Pinned WriteBooster, and Intelligent Latency Tracker.

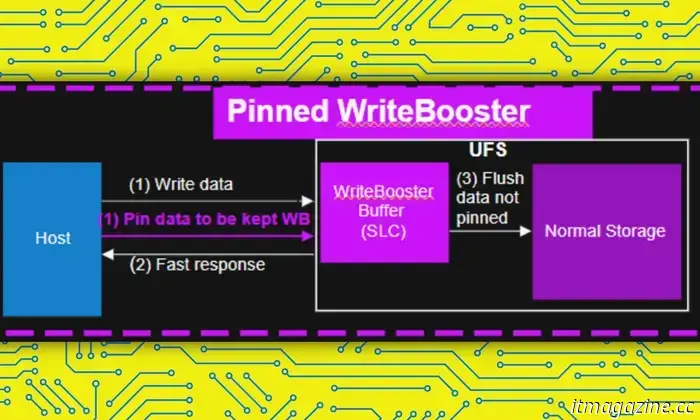

“This feature allows the processor or host to identify and isolate or ‘pin’ frequently used data on a smartphone to a section of the storage device called the WriteBooster buffer (similar to a cache) for rapid access,” Rivera says of the Pinned WriteBooster feature.
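
The idea of pinning can be illustrated with a toy model. The sketch below is not Micron's firmware logic or any UFS API; it is a hypothetical least-recently-used buffer, with made-up names such as `PinnedBuffer`, in which pinned entries are simply exempt from eviction.

```python
from collections import OrderedDict
from typing import Optional


class PinnedBuffer:
    """Toy LRU buffer in which pinned keys are never evicted.

    Purely illustrative: this is not Micron's firmware logic or a UFS API.
    """

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._data = OrderedDict()   # key -> bytes, oldest entry first
        self._pinned = set()         # keys exempt from eviction

    def put(self, key: str, value: bytes, pin: bool = False) -> None:
        self._data[key] = value
        self._data.move_to_end(key)  # mark as most recently used
        if pin:
            self._pinned.add(key)
        self._evict()

    def get(self, key: str) -> Optional[bytes]:
        if key not in self._data:
            return None              # miss: caller falls back to slower storage
        self._data.move_to_end(key)
        return self._data[key]

    def _evict(self) -> None:
        # Drop least recently used unpinned entries until the buffer fits.
        while len(self._data) > self.capacity:
            victim = next((k for k in self._data if k not in self._pinned), None)
            if victim is None:
                break                # everything is pinned; nothing to evict
            del self._data[victim]


buf = PinnedBuffer(capacity=2)
buf.put("ai_weights", b"...", pin=True)   # hypothetical frequently used data
buf.put("photo_1", b"...")
buf.put("photo_2", b"...")                # evicts photo_1, not the pinned entry
print(buf.get("ai_weights") is not None)  # True
print(buf.get("photo_1") is None)         # True
```

In this model the pinned entry stays resident no matter how much other data passes through the buffer, which is the property the quote describes.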

Every AI model — like Google Gemini or ChatGPT — that operates on-device requires its own set of instruction files stored locally on the mobile device. For instance, Apple Intelligence needs 7GB of storage for its operations.

When executing a task, the entire AI package cannot be loaded into RAM, because space is also needed for vital functions like making calls or running other key apps. To work within that limit, a memory map is used so that only the necessary AI weights are loaded from the Micron storage module into RAM.
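
Memory mapping in general can be sketched with Python's standard `mmap` module. The example below is only an analogy for the approach described above, not how any phone or AI runtime is actually implemented: the file layout, the layer sizes, and the helper names `write_dummy_weights` and `load_layer` are all assumptions made for illustration.

```python
import mmap
import os
import struct
import tempfile

FLOATS_PER_LAYER = 1024
NUM_LAYERS = 8
LAYER_BYTES = FLOATS_PER_LAYER * 4  # 4 bytes per 32-bit float


def write_dummy_weights(path: str) -> None:
    """Create a stand-in weights file: layer i is filled with the value float(i)."""
    with open(path, "wb") as f:
        for layer in range(NUM_LAYERS):
            values = [float(layer)] * FLOATS_PER_LAYER
            f.write(struct.pack(f"<{FLOATS_PER_LAYER}f", *values))


def load_layer(path: str, layer: int) -> tuple:
    """Map the file and copy out only the bytes belonging to one layer."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            start = layer * LAYER_BYTES
            # Only the region we slice is copied into our process; the OS
            # decides which pages to bring in (possibly with some read-ahead).
            chunk = mapped[start:start + LAYER_BYTES]
    return struct.unpack(f"<{FLOATS_PER_LAYER}f", chunk)


path = os.path.join(tempfile.mkdtemp(), "weights.bin")
write_dummy_weights(path)
layer_5 = load_layer(path, 5)
print(len(layer_5), layer_5[0])  # 1024 5.0
```

The whole file is addressable through the map, but only the requested slice ends up in the program's memory, which is why faster storage reads translate directly into faster access to the weights.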

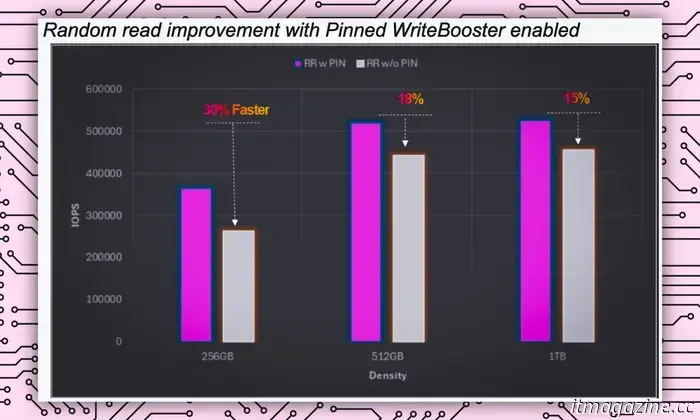

When resources are limited, a faster data exchange becomes critical. This ensures AI tasks are completed without compromising the performance of other essential operations. With Pinned WriteBooster, this data transfer is accelerated by 30%, enabling prompt execution of AI tasks.

For example, if you need Gemini to access a PDF for review, the quick memory swap allows the relevant AI weights to transfer swiftly from storage to RAM.

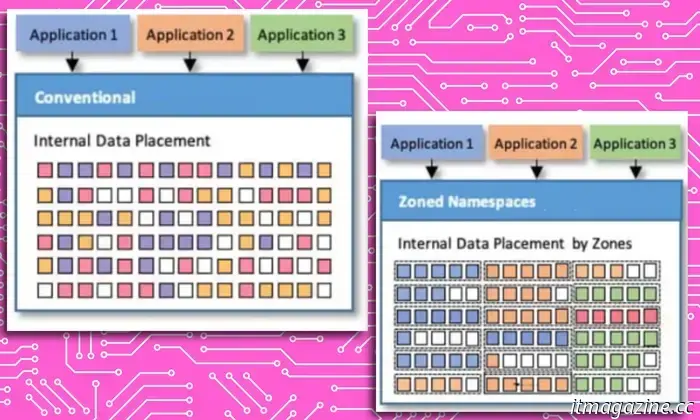

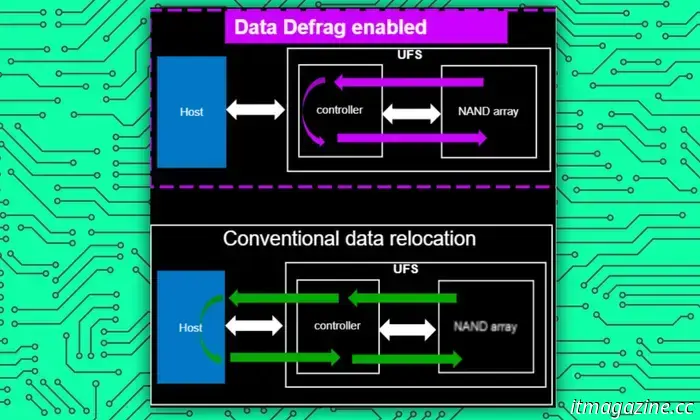

Next is Data Defrag. Think of it as an organizer for a desk or cabinet that ensures items are systematically arranged within designated areas for easy retrieval.

In smartphones, as more data accumulates over time, it is often stored in a disorganized manner. This disorganization makes it challenging for the system to access specific files, leading to slower performance.

Rivera states that Data Defrag not only enhances data organization but also optimizes the interaction pathway between the storage and device controller. In doing so, it improves data read speed by an impressive 60%, which accelerates all interactions with the device, including AI processes.
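
As a rough illustration of why fragmentation matters, the toy model below represents storage as a list of blocks labeled with the file that owns them. It is a classic textbook-style defragmentation sketch, not Micron's Data Defrag implementation; the layout and the function names `count_extents` and `defragment` are hypothetical.

```python
from itertools import groupby


def count_extents(blocks: list) -> dict:
    """Count contiguous runs (extents) each file occupies; None marks free space."""
    extents = {}
    for owner, _run in groupby(blocks):
        if owner is not None:
            extents[owner] = extents.get(owner, 0) + 1
    return extents


def defragment(blocks: list) -> list:
    """Rewrite the layout so each file's blocks sit together, free space last."""
    order = []
    for owner in blocks:
        if owner is not None and owner not in order:
            order.append(owner)  # keep files in first-seen order
    packed = [owner for name in order for owner in blocks if owner == name]
    return packed + [None] * (len(blocks) - len(packed))


layout = ["A", "B", "A", None, "C", "B", "A", None, "C"]
print(count_extents(layout))              # {'A': 3, 'B': 2, 'C': 2}
print(count_extents(defragment(layout)))  # {'A': 1, 'B': 1, 'C': 1}
```

Fewer extents per file means fewer separate lookups to read a file end to end, which is the intuition behind the read-speed gain described above.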

“This feature can speed up AI functions, such as when a generative AI model retrieves data from storage to memory, enabling faster reading from storage into

Other articles

The showrunners of The Last of Us discuss the transformative second episode of season 2.

There's no turning back as season 2 of The Last of Us arrives at a pivotal point in the narrative.

The showrunners of The Last of Us discuss the transformative second episode of season 2.

There's no turning back as season 2 of The Last of Us arrives at a pivotal point in the narrative.

The five most promising scaleups in France are joining TECH5's 'Champions League of Tech.'

Five French scaleups have been selected for TECH5, known as the "Champions League of Tech." They will now vie for the title of the leading scaleup in Europe.

The five most promising scaleups in France are joining TECH5's 'Champions League of Tech.'

Five French scaleups have been selected for TECH5, known as the "Champions League of Tech." They will now vie for the title of the leading scaleup in Europe.

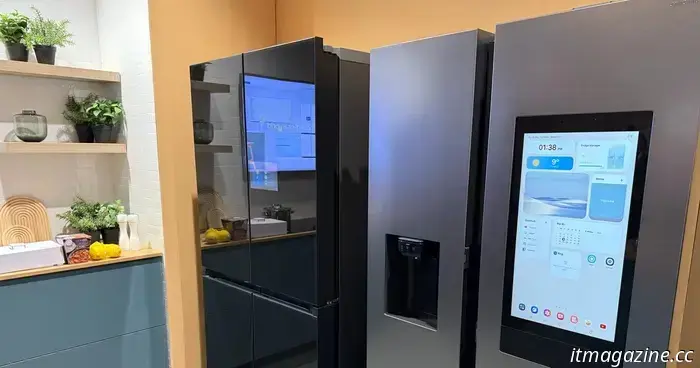

Samsung is leveraging AI to forecast appliance failures – but there’s a drawback.

Samsung is integrating AI across various products, including its home appliances. We have already reported on the incorporation of AI in Samsung washing machines and its Bespoke AI Jet Ultra. Additionally, the company is utilizing AI to keep an eye on your appliances and predict potential breakdowns. [...]

Samsung is leveraging AI to forecast appliance failures – but there’s a drawback.

Samsung is integrating AI across various products, including its home appliances. We have already reported on the incorporation of AI in Samsung washing machines and its Bespoke AI Jet Ultra. Additionally, the company is utilizing AI to keep an eye on your appliances and predict potential breakdowns. [...]

The iPhone 17 Pro might come with an exciting, essential accessory that surpasses a traditional case.

According to a recent rumor, Apple might be considering using the camera module of the iPhone 17 Pro as the spot for a unique new type of accessory.

The iPhone 17 Pro might come with an exciting, essential accessory that surpasses a traditional case.

According to a recent rumor, Apple might be considering using the camera module of the iPhone 17 Pro as the spot for a unique new type of accessory.

NYT Crossword: solutions for Monday, April 21.

The crossword puzzle in The New York Times can be challenging, even if it's not the Sunday edition! If you're facing difficulties, we're available to assist you with today’s clues and solutions.

NYT Crossword: solutions for Monday, April 21.

The crossword puzzle in The New York Times can be challenging, even if it's not the Sunday edition! If you're facing difficulties, we're available to assist you with today’s clues and solutions.

Intel Nova Lake CPUs might necessitate new motherboards featuring the LGA 1954 socket.

Intel's upcoming desktop CPUs are anticipated to adopt a new socket.

Intel Nova Lake CPUs might necessitate new motherboards featuring the LGA 1954 socket.

Intel's upcoming desktop CPUs are anticipated to adopt a new socket.
