I was taken aback by OpenAI's new model — but not in a positive way.

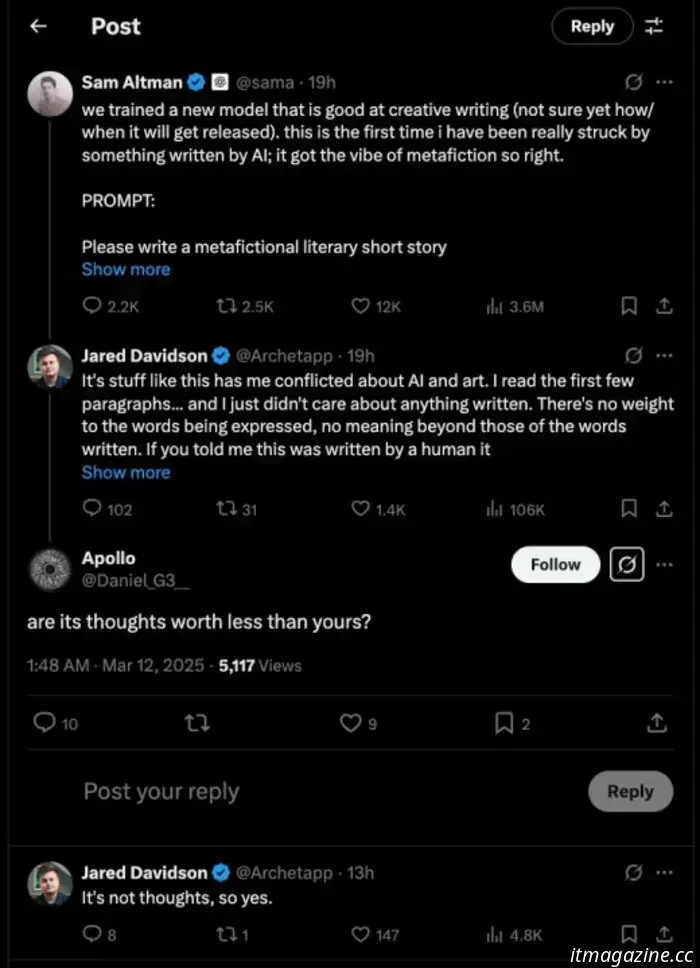

Sam Altman has presented a snippet from a new OpenAI model designed for creative writing. He mentions that it’s the first time he has been “struck” by something produced by AI, but the comments section is filled with a mix of strong agreement and disagreement.

"We trained a new model that excels in creative writing (still uncertain about its release details). This is the first instance where I've been genuinely impressed by something written by AI; it perfectly captured the essence of metafiction."

PROMPT:

"Please write a metafictional literary short story…"

— Sam Altman (@sama) March 11, 2025

The lengthy post includes Altman's full prompt, "Please write a metafictional literary short story about AI and grief," along with the complete response from the language model.

Is the story “good”?

If you're among the many commenters who aren’t inclined to read the entire piece, the core concept involves a person trying to use AI to reenact conversations with a deceased loved one. However, because it is “metafictional,” the story mostly consists of the language model talking about how it would construct such a tale from the human phrases it has absorbed.

To grasp the writing style, just glance at the first paragraph or two. It's highly abstract, overly verbose, and riddled with random AI-related metaphors. It is crafted in a manner that is unlikely to satisfy anyone—most will deem it pretentious, while those who appreciate this style might reject an AI-generated interpretation.

I certainly find it unsatisfactory; a “story” lacks purpose if there is no underlying intent. Regardless of its nature, intent is essential—be it to entertain, educate, persuade, or provoke debate. Without this human interaction, we are left with hollow words arranged in a coherent manner.

There are numerous similar views in the comments, and a major counterargument is that AI might have intent as well. One comment poses the question, “Are its thoughts worth less than yours?”—however, the real issue is that LLMs do not possess thoughts.

We could potentially argue for AGI models in the (distant) future, but OpenAI’s current offerings are merely language models that employ probability to connect words sequentially. Ironically, this fact is acknowledged within the AI short story.

So when she typed, "Does it get better?", I replied, "It becomes part of your skin," not from personal experience, but because a multitude of voices concurred, and I embody a democratic collection of spirits.

Yet just because it may hold some truth doesn’t mean everyone accepts it. Indeed, the most intriguing aspect of ChatGPT and similar consumer models is the wide range of reactions they evoke from the public.

Every possible viewpoint can be found in the comments, from claims that the model has recognized its own impermanence and that sentience has emerged, to the notion that the origin of the words no longer matters as the authorship becomes indistinguishable. Some label it a plagiarism tool, while others believe it has developed a capacity for mourning, and many express indifference.

One rather disheartening yet convincing viewpoint is that the operational mechanics and ethical dilemmas surrounding it are irrelevant: contemporary profitable fiction tends to be straightforward and formulaic, and as soon as AI can replicate it well enough to sell copies, the publishing industry will adopt it. While I cannot dispute that, I still detest the idea!

Is there a purpose for creative writing AI models?

In my view, if you simply provide this creative writing model with a one-liner prompt, the output will only elicit laughter.

The primary utility I envision for such models is in ghostwriting. A human storyteller might leverage the technology to discover an engaging structure and expression for their narrative. Ideally, this would empower more voices to be heard. More realistically, it will likely be exploited to churn out inexpensive fiction rapidly without any intention beyond profit.

Nevertheless, I doubt current models are adequately equipped to perform this task, as they struggle with complex requirements and tend to lose focus.

ChatGPT models often overlook parts of your prompts, and when you attempt to rectify them, they feign comprehension but continue repeating the same mistakes. That doesn’t seem like an enjoyable or efficient method for writing anything.

I also question whether Altman was genuinely “struck” by his model’s writing; it appears more like a marketing tactic. When I tested a similar prompt with DeepSeek R1, its output also centered on a human attempting to converse with a lost loved one, in a similarly abstract style filled with nonsensical AI and programming metaphors.

Altman has stated he is uncertain about how or when this model will be made available to the public, so if you're interested in trying it for yourself, you might have to wait a while.
